12. TensorFlow Cross-Entropy

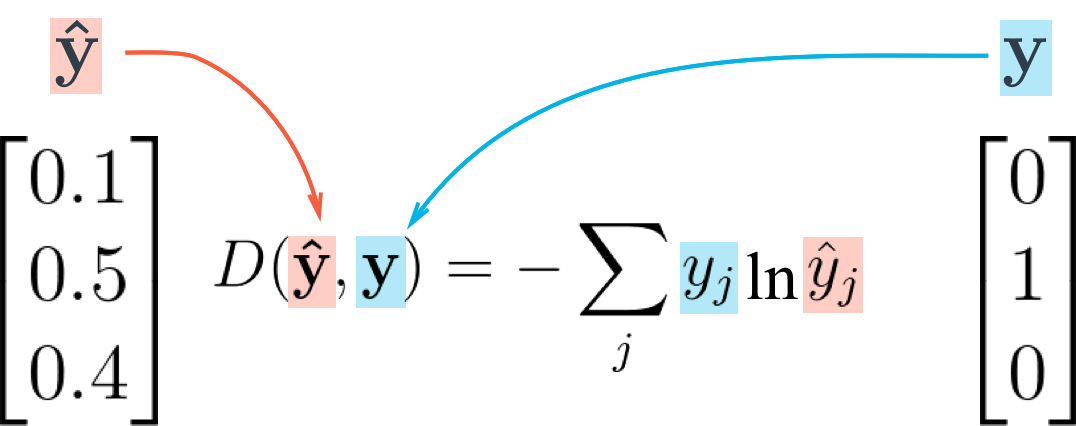

We previously covered the equation for multi-class cross-entropy, also shown above. The softmax function outputs a probability for each class; for a well-trained model, the probability of the correct class is close to 1.0 and the probabilities of the remaining classes are close to 0. The cross-entropy function compares these probabilities to the one-hot encoded labels.
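To make the comparison concrete, here is a minimal plain-Python sketch of the calculation, assuming the usual form of the equation (the negative sum over classes of label times the natural log of the predicted probability). The function name `cross_entropy` and the example values are made up for illustration and do not come from the lesson.

```python
import math

def cross_entropy(probs, one_hot):
    """Return -sum_j y_j * ln(p_j) for a single example."""
    return -sum(y * math.log(p) for p, y in zip(probs, one_hot))

# Hypothetical softmax output for three classes, with class 1 as the true label.
probs = [0.1, 0.6, 0.3]
one_hot = [0.0, 1.0, 0.0]

loss = cross_entropy(probs, one_hot)
print(round(loss, 4))  # 0.5108
```

Because the labels are one-hot, only the term for the correct class survives, so the loss here is simply -ln(0.6). The closer the predicted probability of the correct class gets to 1.0, the closer the loss gets to 0.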

15 Tensorflow CrossEntropy V1

Let's take what you learned from the video and create a cross-entropy function in TensorFlow. To do so, you'll need two new functions:

Reduce Sum

    x = tf.reduce_sum([1, 2, 3, 4, 5])  # 15

The tf.reduce_sum() function takes an array of numbers and sums them together.

Natural Log

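    x = tf.log(100.0)  # 4.60517

tf.log() takes the natural log of a number. The input must be a floating-point value, which is why the example uses 100.0 rather than the integer 100. In TensorFlow 2.x, the same function is available as tf.math.log().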
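Both functions also work on whole tensors, not just single values. The following is a small sketch, assuming TensorFlow 2.x with eager execution (where the natural log is `tf.math.log` and results can be read with `.numpy()`); the tensor values are made up for illustration.

```python
import tensorflow as tf  # assumes TensorFlow 2.x (eager execution)

values = tf.constant([[1.0, 2.0, 3.0],
                      [4.0, 5.0, 6.0]])

total = tf.reduce_sum(values)             # sum over every element
row_sums = tf.reduce_sum(values, axis=1)  # sum within each row

# tf.math.log is applied element-wise and expects floating-point input.
logs = tf.math.log(tf.constant([1.0, 10.0, 100.0]))

print(total.numpy())     # 21.0
print(row_sums.numpy())  # [ 6. 15.]
print(logs.numpy())
```

With no `axis` argument, `tf.reduce_sum` collapses the tensor to a single number; passing `axis` keeps the other dimensions.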

Quiz

Let's implement and print the cross-entropy, given a set of softmax values and the corresponding one-hot encoded labels.

Start Quiz: